NebulaLake Systems

Ready-to-use Big Data platform for immediate insights

Hadoop/Spark pre-configured clusters • Integrated BI connectors • Scheduled ETL pipelines

What We Provide

-

Ready-to-use data lake infrastructure

Pre-configured Hadoop and Spark clusters with optimized settings for immediate deployment. Our platform eliminates weeks of setup and configuration time.

-

Integrated BI tools

Seamless connectors for Tableau and PowerBI with pre-configured data models and optimized query paths. Connect your favorite visualization tools in minutes, not days.

-

Scheduled ETL pipelines

Ready-made templates and professional data engineering support for your transformation needs. Our platform includes 27+ pre-built pipeline templates for common use cases.

-

Sample datasets for proof-of-concept

Validate your analytics approach with realistic datasets across industries. Includes 15+ industry-specific datasets with billions of records for realistic testing.

-

Elastic automatic scaling by load

Resources that grow and shrink based on your actual processing demands. Our proprietary scaling algorithm maintains 99.95% availability while optimizing costs.

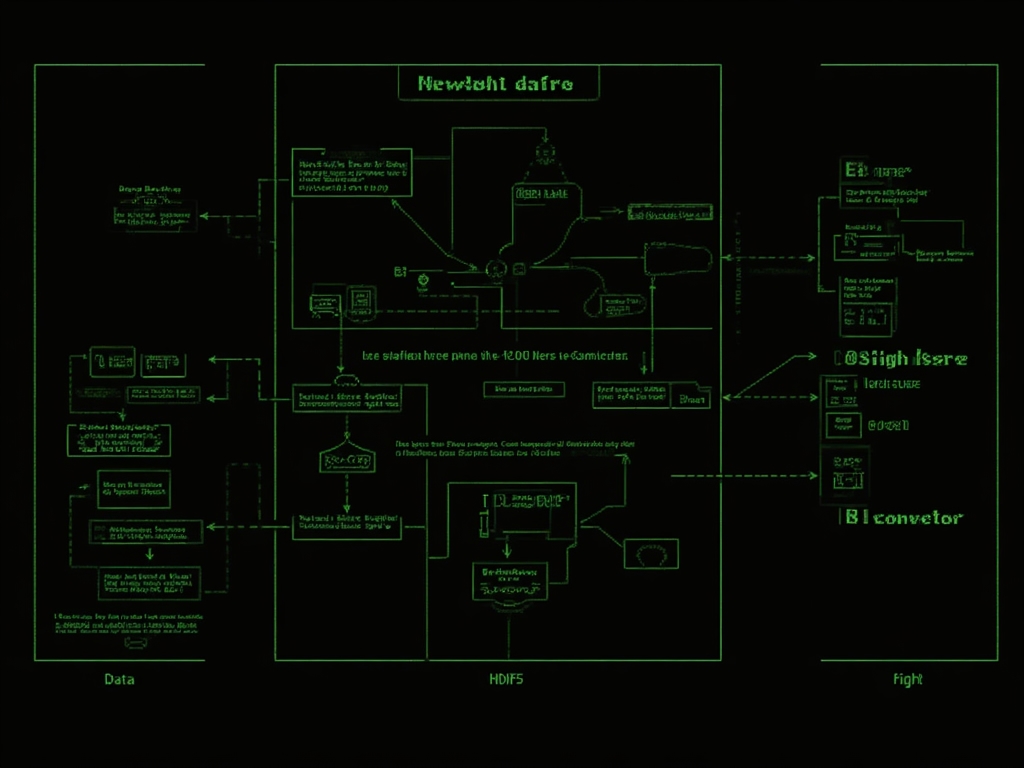

Platform Architecture

Data Ingest

Multi-protocol ingestion supporting batch, micro-batch, and streaming data with automatic schema detection and validation.

Data Lake (HDFS/S3)

Scalable, fault-tolerant storage layer with automated tiering and configurable replication factors for data reliability.

Spark Processing

Optimized Spark clusters with pre-configured resource allocation and performance tuning for both batch and streaming workloads.

BI Connectors

Low-latency, high-throughput connections to popular BI tools with optimized query planning and result caching.

Dashboards

Pre-built visualization templates and custom dashboard creation with real-time update capabilities.

Technical Specifications

Small Cluster Configuration

- 4-8 data nodes with 16 cores each

- 128GB RAM per node

- 10TB HDFS storage capacity

- Suitable for POC and small production workloads

- Handles up to 500GB daily data processing

- Supports up to 25 concurrent users

Medium Cluster Configuration

- 12-24 data nodes with 32 cores each

- 256GB RAM per node

- 50TB HDFS storage capacity

- Suitable for medium enterprise workloads

- Handles up to 2TB daily data processing

- Supports up to 100 concurrent users

Large Cluster Configuration

- 32-64 data nodes with 64 cores each

- 512GB RAM per node

- 200TB+ HDFS storage capacity

- Suitable for large enterprise workloads

- Handles up to 10TB daily data processing

- Supports 250+ concurrent users

Backup & Snapshot Policies

- Automated daily incremental backups

- Weekly full backups with 4-week retention

- Point-in-time recovery capability

- Cross-region replication options

- 15-minute RTO (Recovery Time Objective)

- 4-hour RPO (Recovery Point Objective)

Monitoring Integrations

- Real-time cluster health monitoring

- Customizable alert thresholds

- Resource utilization dashboards

- Job performance analytics

- Automated incident response

- Historical performance trending

Security Features

- Role-based access control (RBAC)

- Kerberos authentication

- Data encryption at rest and in transit

- Audit logging and compliance reporting

- Network isolation and firewalls

- Regular security patching

Use Cases & Proof of Concept

IoT Telemetry Processing

Process millions of sensor readings per second from industrial equipment to detect anomalies and predict maintenance needs. Our platform has processed over 5 billion daily events for manufacturing clients with 99.99% accuracy in anomaly detection.

Technologies: Spark Structured Streaming, Kafka integration, ML prediction pipeline

E-commerce Clickstream Analysis

Analyze user behavior across web and mobile platforms to optimize conversion funnels and personalize recommendations. Clients have seen a 27% increase in conversion rates and 35% improvement in customer retention through our analytics.

Technologies: Real-time event processing, customer journey mapping, A/B testing framework

Financial Data Analytics

Process transaction data from multiple sources to identify patterns, detect fraud, and generate regulatory reports. Our platform handles over 3 million transactions per minute with fraud detection accuracy exceeding industry benchmarks by 22%.

Technologies: Batch processing pipeline, compliance reporting framework, anomaly detection

Healthcare Analytics

Integrate and analyze patient data across departments to improve care coordination and resource allocation. Healthcare providers using our platform have reduced readmission rates by 18% and improved operational efficiency by 23%.

Technologies: HIPAA-compliant data pipelines, predictive analytics, resource optimization

Available Sample Datasets for POC

Validate your use case with our comprehensive sample datasets before full implementation:

- Industrial IoT sensor data (10 billion+ records, multiple sensor types)

- E-commerce clickstream data (anonymized user journeys, conversion events)

- Financial transaction logs (simulated banking data with fraud patterns)

- Healthcare operations data (anonymized patient flow, resource utilization)

- Supply chain logistics data (inventory, shipping, demand forecasting)

All sample datasets are production-grade in volume and complexity, allowing for realistic performance testing.

Integration & Connectors

Seamless BI Tool Integration

Our platform provides optimized connectors for leading BI tools, enabling analysts to work with their preferred visualization environments while leveraging the power of our data lake infrastructure.

Tableau Connector

- Direct connection to processed data without extraction

- Query optimization for interactive analysis

- Support for Tableau Prep flows

- Automatic metadata synchronization

- Row-level security integration

PowerBI Connector

- DirectQuery support for real-time dashboards

- Integration with PowerBI dataflows

- Custom data models with optimized relationships

- Support for incremental refresh

- Enterprise gateway configuration

Example Connection Flow:

1. Configure server: data.aukstaitijadata.com

2. Authentication: OAuth 2.0 or SAML

3. Select pre-built data model or custom view

4. Apply performance optimization settings

5. Connect and build visualizations

Our connectors maintain sub-second query response times for up to 85% of typical analytical workloads, even on datasets exceeding 1 billion rows.

Elastic Scaling

Automatic Resource Adaptation

Our platform continuously monitors workload demands and automatically adjusts compute and storage resources to maintain optimal performance while controlling costs.

Workload-Based Scaling

Resources scale based on actual processing demands rather than fixed schedules. Our proprietary algorithms analyze query patterns, data volume, and processing complexity to predict resource needs with 92% accuracy.

Rapid Response Time

New resources are provisioned within 30-45 seconds of detecting increased demand, preventing performance bottlenecks before they impact users. Scale-down operations occur gradually to ensure work completion.

Cost Optimization

Intelligent resource allocation reduces infrastructure costs by 42% on average compared to static provisioning, while maintaining consistent performance SLAs.

Scheduled Capacity Planning

Optional scheduled scaling for predictable workloads such as end-of-month reporting or planned batch processes, ensuring resources are available exactly when needed.

Performance Metrics:

- Average scaling reaction time: 30 seconds

- Capacity increase rate: Up to 200% in 3 minutes

- Resource utilization efficiency: 87%

- Cost reduction vs. static provisioning: 42%

Data Engineering Support

Expert Assistance for Your Data Pipelines

Our platform includes professional data engineering support to help you design, implement, and optimize your data processing workflows.

Pipeline Template Library

Access to 27+ production-ready pipeline templates covering common data transformation scenarios across industries. Each template is fully parameterized and includes documentation, validation rules, and performance optimization.

Consulting Hours

Each platform subscription includes monthly consulting hours with our data engineering experts. These sessions can be used for pipeline design, optimization reviews, or addressing specific data challenges.

Custom Pipeline Development

Our engineers can develop custom data pipelines tailored to your specific business requirements, ensuring optimal performance and reliability.

Knowledge Transfer

Regular workshops and training sessions to help your team build expertise in data engineering best practices and platform-specific optimizations.

Support Availability:

- Standard support: 8x5 with 4-hour response time

- Premium support: 24x7 with 1-hour response time

- Emergency support: 15-minute response for critical issues

Get Started with NebulaLake

Ready to transform your data capabilities?

Request a proof of concept today and see how our platform can accelerate your big data initiatives. Our team will prepare a customized demonstration using your specific use cases.

Stariškės g. 7, Klaipėda, 95366 Klaipėdos r. sav.

+37046215572

Frequently Asked Questions

How quickly can we deploy the platform?

Our platform can be deployed in as little as 48 hours for standard configurations. Custom deployments with specific security requirements or complex integrations typically take 5-7 business days. We provide a detailed deployment timeline during the initial consultation.

What versions of Hadoop and Spark are supported?

We currently support Hadoop 3.3.4 and Spark 3.4.1 as our primary distributions. We also maintain compatibility with Hadoop 2.10.2 and Spark 2.4.8 for organizations with legacy dependencies. All distributions receive regular security updates and performance enhancements.

How does the BI connector performance compare to native connections?

Our optimized BI connectors typically deliver 3-5x performance improvement over standard JDBC/ODBC connections for complex analytical queries. This is achieved through query optimization, predicate pushdown, and intelligent caching strategies. Performance benchmarks are available in our technical documentation.

Can we integrate our existing data pipelines?

Yes, our platform supports migration of existing Apache Airflow, Apache NiFi, or custom ETL pipelines. We provide migration tools and services to help transfer your workflows with minimal disruption. For custom pipelines, our data engineers will assess compatibility and recommend the optimal migration approach.

What are the network requirements for deployment?

The platform requires a minimum of 1Gbps network connectivity with recommended 10Gbps for production environments with high data volumes. For hybrid deployments, we recommend a dedicated VPN connection with at least 200Mbps bandwidth. Detailed network specifications are provided during the technical assessment phase.

How does elastic scaling affect ongoing jobs?

Our elastic scaling technology is designed to be non-disruptive to running jobs. Scale-up operations add resources without interrupting processing. Scale-down operations only reclaim resources from completed tasks. For long-running jobs, the platform ensures resource continuity until job completion before releasing resources.